| 일 | 월 | 화 | 수 | 목 | 금 | 토 |

|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | ||

| 6 | 7 | 8 | 9 | 10 | 11 | 12 |

| 13 | 14 | 15 | 16 | 17 | 18 | 19 |

| 20 | 21 | 22 | 23 | 24 | 25 | 26 |

| 27 | 28 | 29 | 30 | 31 |

- content script

- react

- 캠스터디

- Chrome Extension

- 디스코드 봇

- 프로그래머스 #정수삼각형 #동적계획법

- nodejs

- C언어로 쉽게 풀어쓴 자료구조

- 동적계획법

- 공부시간측정어플

- 자료구조

- supabase

- 크롬 확장자

- discord.js

- 포도주시식

- X

- 파이썬

- TypeScript

- 백준 #7568번 #파이썬 #동적계획법

- 백준 7579

- background script

- 2156

- 크롬 익스텐션

- webpack

- 백준

- Message Passing

- popup

- 갓생

- Today

- Total

히치키치

Clustering Wholesale Customer 본문

Hello everyone, this is team Kihakhak. For our final project, among diverse machine learning algorithm, we decided to focus on unsupervised algorithm, especially clustering.

These are our team members.

While searching for problems to solve using machine learning, we discovered that distribution industry has a lot to consider, such as customers sale, sales item, and etc. Running business with thousands of customers requires deeper understanding of customers, but it is hard to identify important features that has huge impact on customers' decision since there are so many factors. Therefore, we decided to use unsupervised machine learning algorithm to solve this problem and help owners conduct efficient strategy for their customers. To sum up, our project goal is to cluster customers based on spending and analyze whether there is specific characteristic that appear commonly in each clusters.

We used Wholesale customer dataset from Kaggle. There are 8 columns in dataset. First two columns are channel and region.

Each number specifies distribution channel which is divided into HoReCa, which stands for hotel, restaurant, cafe, and retail. Each number specifies location for Regions column. Moving on, other six columns represent annual spendings on Fresh, Milk, Grocery, Frozen, Detergents paper, and Delicatessen.

We came up with three candidate models, which are DBScan, K-means, and Hierarchical Clustering.

Our first candidate DBScan got eliminated because it failed to return accurate result although we preprocessed data as much as we can. As shown above, datas are gathered with similarly high density,, which makes it difficult to separate them into different clusters. With smaller epsilon value, too many outliers are detected and with larger value, all datas are clustered as one.

Now we have two candidates and we decided to use both of them for training because they showed similar performance.

For training we preprocessed data for each model and tried to find appropriate hyperparameter values and ran models. Then, we selected models with best evaluation metric. Then we analyzed the results and tried to overcome limitation for unsupervised learning.

For K-means model, we preprocessed data through log normalization as shown on the left side and replaced outliers as shown on the right.

In order to find optimal hyperparmeter k, elbow method was used. We chose k as 6. And to make sure, we checked silhouette coefficient which also tells us that 6 is the optimal number.

Next, for Hierarchical Clustering, data preprocessing was done in similar method as k-means.

In order to compute distance between clusters, we used each methods and concluded that ward method was the most efficient.

Then, we ran hierarchical model and found out that optimal k is 5 or 6, considering intercluster distance through the plot as shown.

Through silhouette coefficient, we were able to conclude that optimal k is 6 since all the clusters reached average of silhouette score, unlike when k is 5.

When k is 5, although two clusters in boxes are similar in distance, left box is clustered into a different cluster while right box is still in same cluster. This proves that optimal k is 6.

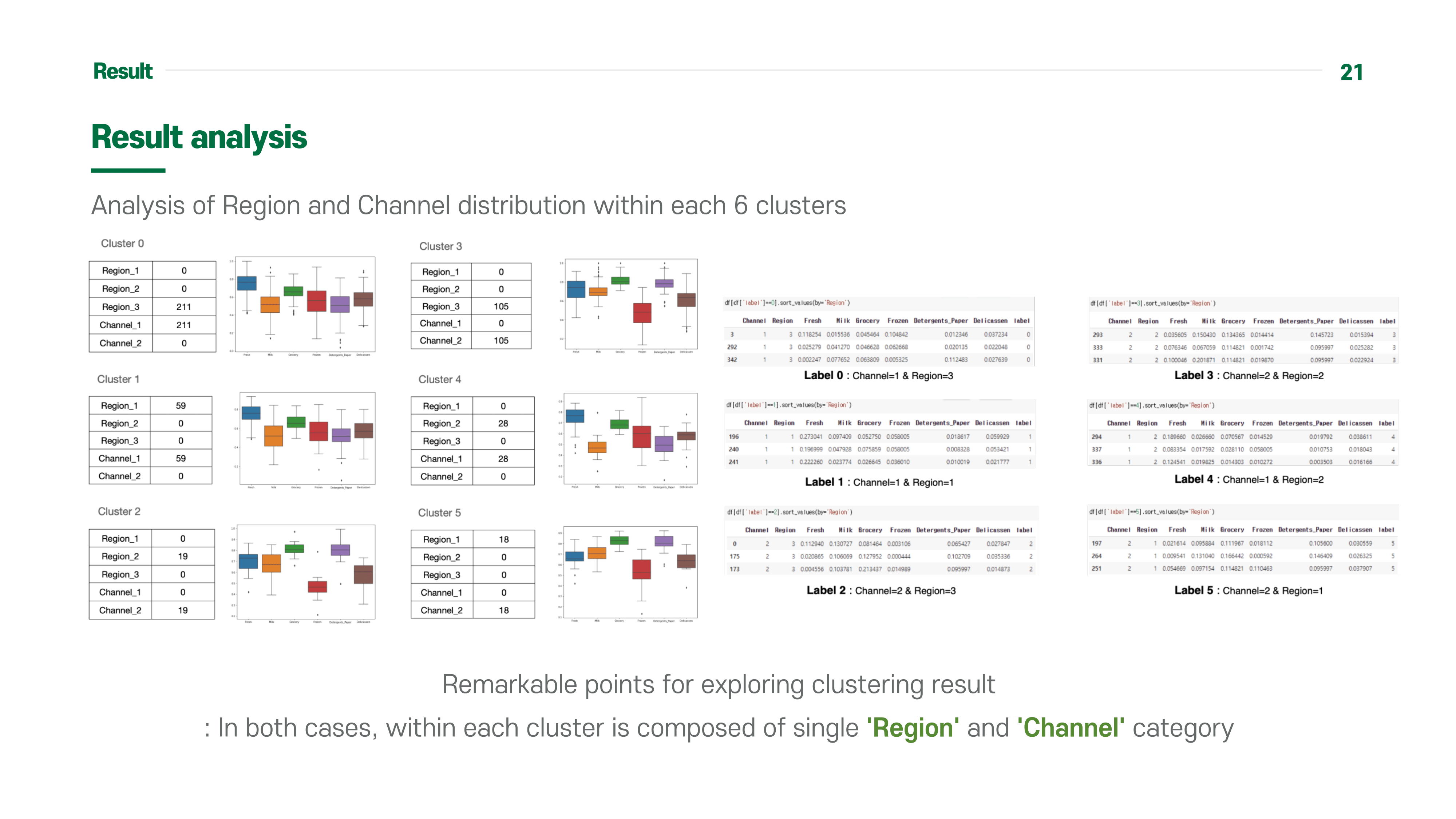

Finally, for clustering result, we concluded that there are 6 clusters for both k-means and hierarchical clustering.

From the result, we were able to figure out a remarkable point, which is that each cluster is divided into cases of region and channel.

However, in order to better explain the result, we used supervised method to reveal black box from unsupervised model. First, we changed the cluster labels to one vs all binary labels and trained classifier to discriminate between each cluster and others. Then, we extracted feature importance from model using Random Forest Classifier.

git : https://github.com/ml-clustering-proj/wholesale-clustering

'AI' 카테고리의 다른 글

| Attention_is_All_You_Need 리뷰 (0) | 2021.03.29 |

|---|---|

| Playing_Atari_with_Deep_Reinforcement_Learning 리뷰 (0) | 2021.03.29 |